As cloud-native architecture develops rapidly, object storage has become a widely used storage method: it is elastic, highly available, and cost-controllable, and it serves scenarios such as log storage, backup and recovery, machine learning, and media resource management. Among the many object storage solutions, MinIO has become a preferred choice for enterprises deploying object storage in private and hybrid cloud environments, thanks to its Amazon S3 protocol compatibility, high performance, and lightweight open-source design.

KubeBlocks provides an operator-centric approach to running stateful services in Kubernetes. KubeBlocks can serve as an optional alternative to dedicated operators for a specific database or storage service. For MinIO specifically, community operator projects include examples such as the MinIO Operator (minio/minio-operator) and other storage operators like Rook (Ceph RGW) for object gateway use cases. KubeBlocks aims to simplify lifecycle management by providing a modular Addon model and unified operational capabilities.

With the development of cloud-native infrastructure, enterprises are increasingly incorporating object storage systems into the Kubernetes environment for unified management. Compared with traditional deployment methods, adopting an operator-based approach for MinIO offers significant advantages:

MinIO itself supports container images and Helm charts, and the community has released a MinIO Operator to achieve more standardized deployment and management through CRDs and Operator patterns. However, in actual enterprise-level use, MinIO's official operator or container-image-centric solutions still face some key issues, mainly including:

Complex configuration and high manual maintenance costs: MinIO distributed deployment requires explicitly listing all node addresses in the startup parameters, and the node order is sensitive. Every node change (such as scaling or redeployment) requires manual modification of the address list. This approach is extremely unfriendly in Kubernetes and is not conducive to automated deployment and dynamic operations.

Limited horizontal scaling: The native cluster node count of MinIO is fixed at initialization and cannot be expanded by simply adding Pods or nodes. If expansion is required, the entire cluster must be redeployed or a new Server Pool must be manually spliced in, increasing system complexity and operational risk.

Weak lifecycle management capabilities: Although some Operators provide basic CRD capabilities, many lack a unified lifecycle management specification. Operations such as version upgrades, parameter changes, backup and recovery, and status detection still rely on manual work or external scripts, making it difficult to integrate them into the platform's operations system for unified management.

In response to strong demand from community users, KubeBlocks now provides a MinIO Addon. The following sections introduce its usage and implementation details.

This section describes how to implement MinIO cluster management, high availability, horizontal scaling, and other functions.

By implementing the following interfaces, basic lifecycle management and operations for MinIO clusters can be achieved in KubeBlocks:

Next, we will mainly introduce the implementation of MinIO ComponentDefinition.

Roles: the component defines two roles, readwrite and notready.

```yaml
roles:
  - name: readwrite
    updatePriority: 1
    participatesInQuorum: false
  - name: notready # a special role to hack the update strategy of its
    updatePriority: 1
    participatesInQuorum: false
```

Services: the default service exposes the API on port 9000 and the console on port 9001.

```yaml
services:
  - name: default
    spec:
      ports:
        - name: api
          port: 9000
          targetPort: api
        - name: console
          port: 9001
          targetPort: console
```

System accounts: define the root account and its password generation policy. KubeBlocks generates the account and password and stores them in the corresponding Secret.

```yaml
systemAccounts:
  - name: root
    initAccount: true
    passwordGenerationPolicy:
      length: 16
      numDigits: 8
      letterCase: MixedCases
```

Variables: MINIO_ROOT_USER holds the MinIO administrator account name, MINIO_ROOT_PASSWORD holds the administrator account password, and so on. These variables are eventually rendered into the Pod as environment variables.

```yaml
vars:
  - name: MINIO_ROOT_USER
    valueFrom:
      credentialVarRef:
        name: root
        optional: false
        username: Required
  - name: MINIO_ROOT_PASSWORD
    valueFrom:
      credentialVarRef:
        name: root
        optional: false
        password: Required
```

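Role probe: the roleProbe action reports readwrite when the local MinIO endpoint accepts the root credentials, and notready otherwise.

```yaml
lifecycleActions:
  roleProbe:
    exec:
      command:
        - /bin/sh
        - -c
        - |
          # POSIX redirection is used so the check also works when /bin/sh is not bash.
          if mc config host add minio http://127.0.0.1:9000 "$MINIO_ROOT_USER" "$MINIO_ROOT_PASSWORD" >/dev/null 2>&1; then
            echo -n "readwrite"
          else
            echo -n "notready"
          fi
```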
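The contract of such a probe is small: run a health check and print exactly one role name. The following is a minimal sketch of that pattern, not the Addon's actual script. The function name `probe_role` is hypothetical, and `true` and `false` stand in for the real `mc` call.

```shell
#!/bin/sh
# probe_role COMMAND [ARGS...]
# Hypothetical helper: runs the given check silently and prints the role
# name that a role probe would report for its outcome.
probe_role() {
  if "$@" >/dev/null 2>&1; then
    printf 'readwrite'
  else
    printf 'notready'
  fi
}

# Stand-ins for a passing and a failing health check.
probe_role true
echo
probe_role false
echo
```

Printing without a trailing newline mirrors the `echo -n` in the probe above, so the output is exactly the role name.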

Runtime: an init container records MINIO_REPLICAS_HISTORY and saves it to a ConfigMap for MinIO horizontal scaling (discussed later).

```yaml
runtime:
  initContainers:
    - name: init
      command:
        - /bin/sh
        - -ce
        - /scripts/replicas-history-config.sh
  containers:
    - name: minio
      command:
        - /bin/bash
        - -c
        - /scripts/startup.sh
```

At this point, a basic MinIO Addon is largely complete. The full implementation can be found at link.

MinIO natively achieves high availability through distributed deployment. Its core mechanism is to form a unified cluster with multiple nodes, using Erasure Coding technology to slice and redundantly encode data, ensuring that data can still be read and written even if some nodes or disks fail. Any node can receive client requests, the system has automatic recovery capabilities, and strict consistency is maintained. The overall architecture has no single point of failure and provides robust service high availability. Therefore, to ensure high availability, MinIO requires at least 4 nodes and recommends using an even number of nodes (such as 4, 6, 8, 16) to achieve balanced data redundancy.
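The redundancy trade-off behind erasure coding can be illustrated with simple arithmetic. The sketch below assumes one drive per node and takes the number of parity shards as an input. The function name `erasure_summary` and the parity values in the usage lines are illustrative assumptions, not MinIO defaults.

```shell
#!/bin/sh
# erasure_summary DRIVES PARITY
# Hypothetical helper: for an erasure set of DRIVES drives with PARITY
# parity shards, print the data shard count, the usable share of raw
# capacity, and how many drives can be lost while data stays readable.
erasure_summary() {
  drives=$1
  parity=$2
  data=$(($drives - $parity))
  usable_pct=$(($data * 100 / $drives))
  printf 'data=%s parity=%s usable=%s%% read_tolerance=%s\n' \
    "$data" "$parity" "$usable_pct" "$parity"
}

erasure_summary 4 2    # data=2 parity=2 usable=50% read_tolerance=2
erasure_summary 16 4   # data=12 parity=4 usable=75% read_tolerance=4
```

More parity means more drives can fail before data becomes unreadable, at the cost of usable capacity.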

To overcome the limitation of MinIO's fixed native node count, KubeBlocks designed and implemented an automated horizontal scaling mechanism in ComponentDefinition. This solution, by combining initContainer and ConfigMap, records and maintains the historical information of each replica count change, dynamically constructing the corresponding Server Pool addresses at container startup, thereby achieving continuous management and splicing of multi-stage replicas.

The core logic of the scaling process is as follows:

In the initContainer, read and maintain a ConfigMap key named MINIO_REPLICAS_HISTORY. Its value records every replica count change. For example, [4,8,16] means the cluster started with 4 nodes and was then expanded to 8 and to 16; each entry is the total number of nodes in the cluster after that change. Example logic is as follows:

```shell
key="MINIO_REPLICAS_HISTORY"
cur=$(get_cm_key_value "$name" "$namespace" "$key")
new=$(get_cm_key_new_value "$cur" "$replicas")
...
# Update ConfigMap
kubectl patch configmap "$name" -n "$namespace" --type strategic -p "{\"data\":{\"$key\":\"$new\"}}"
```
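How a new history value might be derived from the current one can be sketched as follows. This is an illustration only, not the Addon's helper: the function name `append_replicas_history` is hypothetical, the bracketed format follows the [4,8,16] example in the text, and the sketch assumes a new entry is appended only when the replica count grows beyond the last recorded value.

```shell
#!/bin/sh
# append_replicas_history CURRENT REPLICAS
# Hypothetical helper: CURRENT is the stored history (for example "[4,8]",
# or empty on first deployment) and REPLICAS is the desired replica count.
append_replicas_history() {
  current=$(printf '%s' "$1" | tr -d '[] ')
  replicas=$2
  if [ -z "$current" ]; then
    # First deployment: the history starts with the initial node count.
    printf '[%s]\n' "$replicas"
    return
  fi
  last=${current##*,}   # last recorded total node count
  if [ "$replicas" -gt "$last" ]; then
    printf '[%s,%s]\n' "$current" "$replicas"
  else
    # Assumption: an unchanged or smaller count leaves the history as-is.
    printf '[%s]\n' "$current"
  fi
}

append_replicas_history "" 4        # first deployment
append_replicas_history "[4]" 8     # scale-out to 8 nodes
append_replicas_history "[4,8]" 8   # restart without a replica change
```

Keeping the history append-only is what lets every earlier Server Pool keep its original address range across restarts.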

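At container startup, the script walks through this history and builds one Server Pool address segment per entry:

```shell
# Initial values (not shown in the original excerpt)
prev=0
server=""
for cur in "${REPLICAS_INDEX_ARRAY[@]}"; do
  if [ "$prev" -eq 0 ]; then
    # First pool: Pods 0 .. cur-1
    server+=" $HTTP_PROTOCOL://$MINIO_COMPONENT_NAME-{0...$((cur-1))}.$MINIO_COMPONENT_NAME-headless.$CLUSTER_NAMESPACE.svc.$CLUSTER_DOMAIN/data"
  else
    # Later pools: Pods prev .. cur-1
    server+=" $HTTP_PROTOCOL://$MINIO_COMPONENT_NAME-{$prev...$((cur-1))}.$MINIO_COMPONENT_NAME-headless.$CLUSTER_NAMESPACE.svc.$CLUSTER_DOMAIN/data"
  fi
  prev=$cur
done
```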
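To see what the loop produces, the same idea can be run standalone. The sketch below is a simplified POSIX sh variant, not the Addon's startup script. The function name `build_server_pools`, the fixed `http` scheme, and the component name, namespace, and domain passed in the usage line are all illustrative assumptions.

```shell
#!/bin/sh
# build_server_pools HISTORY NAME NAMESPACE DOMAIN
# Hypothetical helper: HISTORY is a replica history such as "[4,8,16]".
# The body runs in a subshell (note the parentheses) so that the IFS
# change used for comma splitting does not leak to the caller.
build_server_pools() (
  hist=$(printf '%s' "$1" | tr -d '[] ')
  name=$2
  namespace=$3
  domain=$4
  prev=0
  pools=""
  IFS=,
  for cur in $hist; do
    last=$(($cur - 1))
    # One pool per history entry, covering Pods prev .. cur-1.
    pools="$pools http://${name}-{${prev}...${last}}.${name}-headless.${namespace}.svc.${domain}/data"
    prev=$cur
  done
  printf '%s\n' "${pools# }"
)

# Print one pool per line for a cluster that grew from 4 to 8 to 16 nodes.
build_server_pools "[4,8,16]" minio-cluster-minio default cluster.local | tr ' ' '\n'
```

With the history [4,8,16], the output contains three segments covering Pods 0 to 3, 4 to 7, and 8 to 15. Existing pools keep their original ranges, and each expansion only appends a new one.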

By maintaining replica history and automatically generating multiple Server Pool address segments, KubeBlocks successfully bypasses the limitation of MinIO's fixed native cluster node count, achieving logical horizontal scaling while maintaining continuous cluster availability and data consistency.

Now let's deploy a MinIO cluster and perform some operational changes. The version information used in this article is as follows:

```shell
# Install the MinIO Addon
kbcli addon install minio --version 1.0.0

# Create a MinIO cluster
kbcli cluster create minio minio-cluster --replicas 2

# Add two nodes
kbcli cluster scale-out minio-cluster --replicas 2 --components minio
```

Adding nodes changes the cluster's server pool addresses, so the existing nodes must be restarted to pick up the new server pool. KubeBlocks does this automatically: when new nodes join the cluster, it restarts all old nodes.

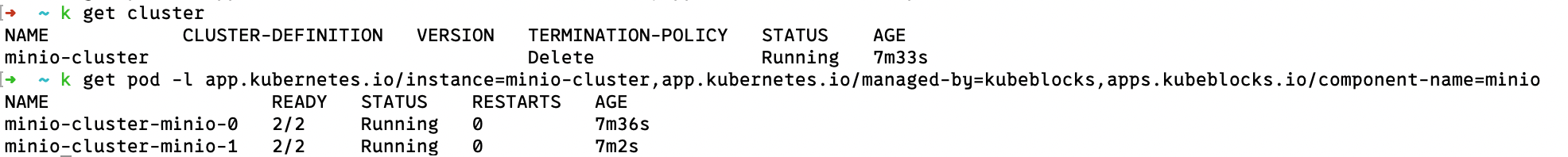

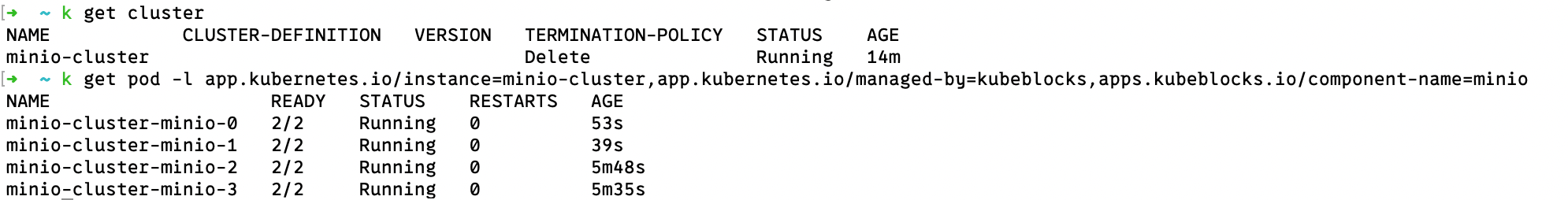

View cluster and pod status

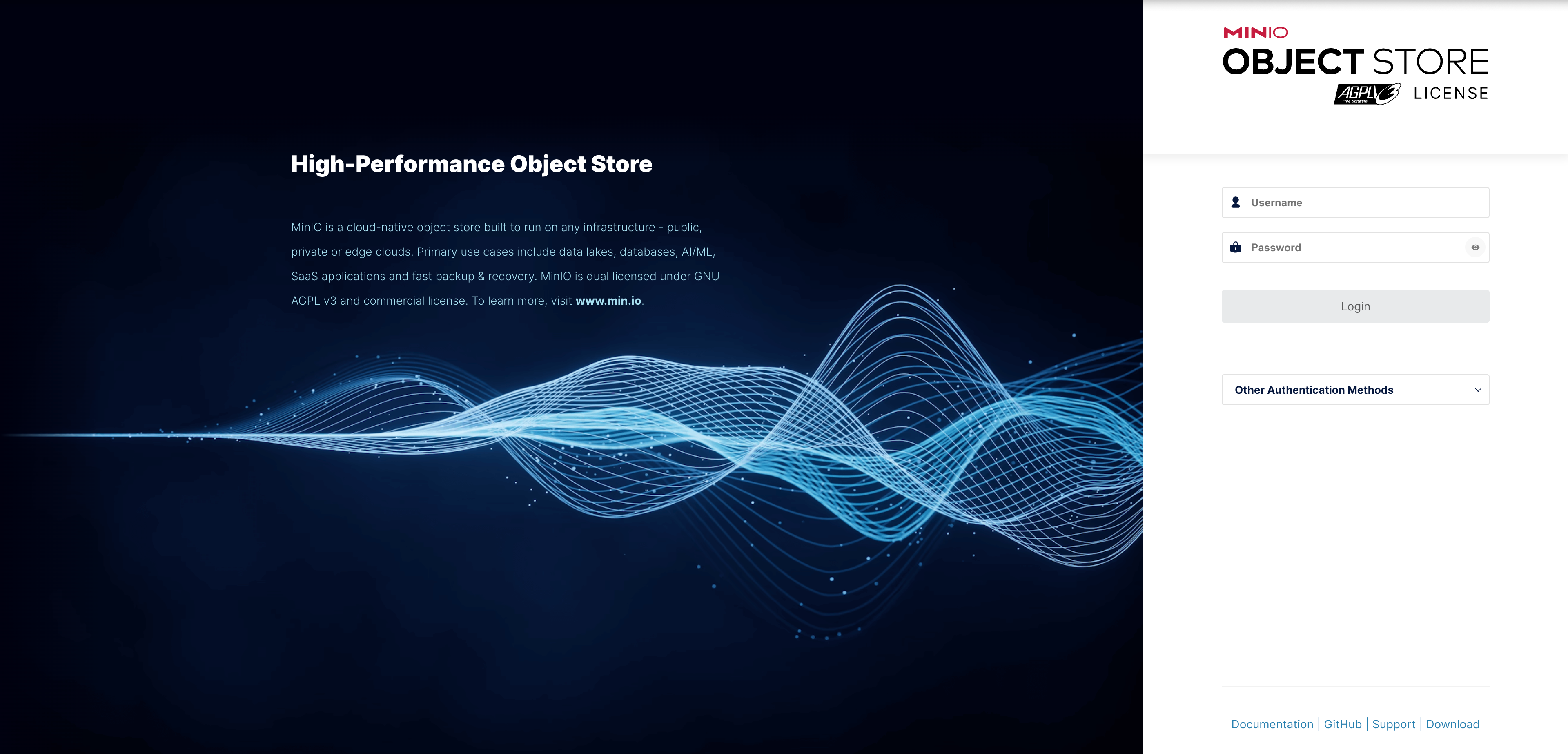

MinIO comes with a Web console (MinIO Console) when running in a container, which is used for background management and visual operations. This console defaults to listening on port 9001 and supports the following functions:

In KubeBlocks, the MinIO Console is exposed as a port of the component's service, which users can reach via NodePort, LoadBalancer, or Ingress. For quick local access, port forwarding also works:

```shell
# Expose port
kubectl port-forward svc/minio-cluster-minio 9001:9001
```

Then open http://localhost:9001/login in your browser.

After MinIO is integrated into KubeBlocks as an Addon, it inherently supports many cluster operation and maintenance tasks. In addition to the horizontal scaling introduced above, it also supports the following operations:

Running MinIO in a cloud-native environment brings challenges such as complex deployment, limited scalability, and cumbersome operations. KubeBlocks, an open-source Kubernetes operator for stateful services, addresses these through its modular MinIO Addon, which provides automated cluster deployment, high service availability, and elastic expansion of Server Pools. Nevertheless, MinIO still has room for optimization in terms of dynamic scaling flexibility and ecosystem compatibility. We will continue to refine these capabilities to help MinIO become a more competitive distributed storage solution in cloud-native scenarios.